This article, by 傲娇篮球 on 靠谱客, covers ROCT SVM address space management in three parts: what a canonical address is, the x86_64 Linux address space layout, and how ROCT manages SVM.

1. What is a canonical address?

In 64-bit mode, an address is considered to be in canonical form if address bits 63 through to the most-significant implemented bit by the microarchitecture are set to either all ones or all zeros.

(Note: here the most-significant implemented bit is bit 47, counting from bit 0, i.e. 48-bit virtual addresses.)

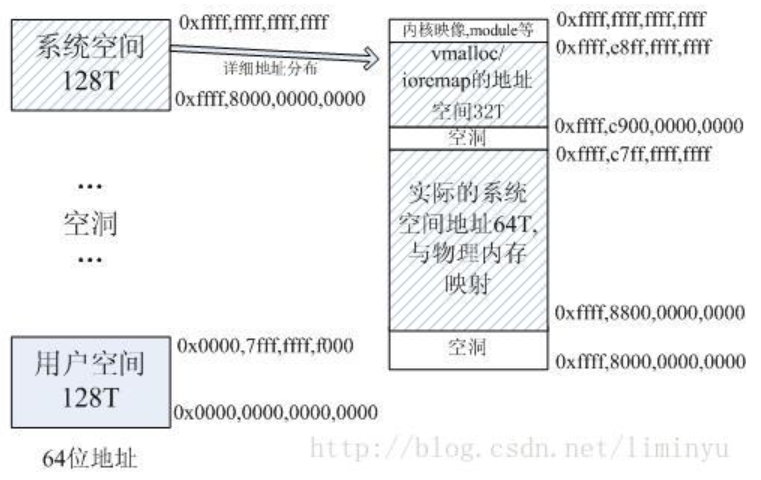
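The definition above can be turned into a small check. The following is a minimal sketch, not code from ROCT: `is_canonical` and its `va_bits` parameter are hypothetical names introduced here, and `va_bits` is assumed to be between 2 and 64.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper (not part of ROCT): returns true if addr is in
 * canonical form for a CPU implementing va_bits virtual-address bits.
 * Assumes 2 <= va_bits <= 64. */
bool is_canonical(uint64_t addr, unsigned va_bits)
{
    /* Keep bits 63 .. (va_bits - 1); for va_bits == 48 that is bits 63..47. */
    uint64_t upper = addr >> (va_bits - 1);
    uint64_t all_ones = UINT64_MAX >> (va_bits - 1);

    /* Canonical means those bits are all zeros or all ones. */
    return upper == 0 || upper == all_ones;
}
```

With `va_bits = 48`, `0x00007FFFFFFFFFFF` and `0xFFFF800000000000` pass the check, while `0x0000800000000000` does not, because bit 47 is set but bits 63 to 48 are clear.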

2. x86_64 Linux address space layout

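Clearly, x86_64 addresses are canonical. With 48-bit virtual addresses (4-level paging), user space lives in the lower canonical half, below 0x0000800000000000, and the kernel lives in the upper canonical half, from 0xFFFF800000000000 upward.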
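The boundaries of the two canonical halves follow directly from the number of implemented address bits. A small sketch, assuming 48-bit virtual addresses; the function names are hypothetical and not taken from the kernel or ROCT:

```c
#include <stdint.h>

/* Highest address of the lower canonical half for va_bits implemented
 * virtual-address bits (hypothetical helper, assumes 2 <= va_bits <= 64). */
uint64_t lower_half_top(unsigned va_bits)
{
    return (UINT64_C(1) << (va_bits - 1)) - 1;
}

/* Lowest address of the upper canonical half: every bit from
 * (va_bits - 1) up to 63 is set, all lower bits are clear. */
uint64_t upper_half_bottom(unsigned va_bits)
{
    return ~lower_half_top(va_bits);
}
```

For `va_bits = 48`, `lower_half_top` is `(1ULL << 47) - 1`, which is the same constant ROCT compares `limit` against in `init_svm_apertures`.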

3. How ROCT manages SVM

Note: the goal of this article is to make the difference between the two mmap calls in the code below clear.

// Called by init_svm_apertures
init_mmap_apertures
{
    // 1. Initialize the svm.apertures[SVM_DEFAULT] structure
    svm.apertures[SVM_DEFAULT].base = (void *)base;
    svm.apertures[SVM_DEFAULT].limit = (void *)limit;
    svm.apertures[SVM_DEFAULT].align = align;
    svm.apertures[SVM_DEFAULT].guard_pages = guard_pages;
    svm.apertures[SVM_DEFAULT].is_cpu_accessible = true;
    svm.apertures[SVM_DEFAULT].ops = &mmap_aperture_ops;

    /*
     * 2. Allocate one page with an anonymous mmap to test that the SVM
     * range is usable. If the allocation succeeds, return right away.
     * Try to allocate one page. If it fails, we'll fall back to
     * managing our own reserved address range.
     */
    addr = aperture_allocate_area(&svm.apertures[SVM_DEFAULT], NULL, PAGE_SIZE);
        // aperture_allocate_area dispatches through the aperture ops:
        return app->ops->allocate_area_aligned(app, address, MemorySizeInBytes, app->align);
            // which for mmap_aperture_ops is:
            mmap_aperture_allocate_aligned
                // Note that this mmap does not force the CPU address to equal an
                // address chosen inside the SVM aperture. The whole process address
                // space is SVM, so any process virtual address returned by an
                // anonymous mmap is guaranteed to fall inside the SVM aperture.
                // That address is then passed to KFD, which gives the same result:
                // the CPU VA and the GPU VA are equal.
                addr = mmap(0, aligned_padded_size, PROT_NONE, MAP_ANONYMOUS | MAP_NORESERVE | MAP_PRIVATE, -1, 0);
}
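The name `aligned_padded_size` suggests that the mapping is over-allocated so an aligned sub-range can be picked from it. The following is a minimal sketch of that general technique, not the ROCT implementation: `reserve_aligned_anywhere` is a hypothetical helper, and it assumes `size` and `align` are multiples of the page size and `align` is a power of two.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE 1
#endif
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>

/* Hypothetical helper: reserve size bytes of address space aligned to
 * align, letting the kernel choose where. Returns NULL on failure. */
void *reserve_aligned_anywhere(size_t size, size_t align)
{
    /* Over-allocate by align so an aligned start must exist inside. */
    size_t padded = size + align;
    uint8_t *raw = mmap(NULL, padded, PROT_NONE,
                        MAP_ANONYMOUS | MAP_NORESERVE | MAP_PRIVATE, -1, 0);
    if (raw == MAP_FAILED)
        return NULL;

    /* Round the start up to the next multiple of align. */
    uintptr_t start = ((uintptr_t)raw + align - 1) & ~((uintptr_t)align - 1);
    size_t head = start - (uintptr_t)raw;
    size_t tail = padded - head - size;

    /* Give the unused padding on both sides back to the kernel. */
    if (head)
        munmap(raw, head);
    if (tail)
        munmap((void *)(start + size), tail);

    return (void *)start;
}
```

The address hint is `NULL`, so the kernel is free to place the mapping anywhere. That is acceptable in this path only because every user-space address is already inside the SVM aperture.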

// Called by fmm_init_process_apertures
init_svm_apertures
{
    /* If the limit is greater or equal 47-bits of address space,
     * it means we have GFXv9 or later GPUs only. We don't need
     * apertures to determine the MTYPE and the virtual address
     * space of the GPUs covers the full CPU address range (on
     * x86_64) or at least mmap is unlikely to run out of
     * addresses the GPUs can handle.
     * If this condition holds, the entire process address space is SVM.
     */
    if (limit >= (1ULL << 47) - 1 && !svm.reserve_svm) {
        // 1. Initialize the svm.apertures[SVM_DEFAULT] structure
        // 2. Allocate one page with an anonymous mmap to test that the SVM
        //    range is usable. If the allocation succeeds, return right away.
        HSAKMT_STATUS status = init_mmap_apertures(base, limit, align, guard_pages);
        if (status == HSAKMT_STATUS_SUCCESS)
            return status;
        /* fall through: fall back to reserved address space */
    }

    /* Try to reserve address space for SVM.
     *
     * Inner loop: try start addresses in huge-page increments up
     * to half the VM size we're trying to reserve
     *
     * Outer loop: reduce size of the allocation by factor 2 at a
     * time and print a warning for every reduction
     * If the process address space is not entirely SVM, a range of the
     * CPU process address space is carved out and used as SVM.
     */
    for (len = limit - base + 1; !found && len >= SVM_MIN_VM_SIZE; len = (len + 1) >> 1) {
        for (addr = (void *)base, ret_addr = NULL; (HSAuint64)addr + ((len + 1) >> 1) - 1 <= limit; addr = (void *)((HSAuint64)addr + ADDR_INC)) {
            // Reserve the SVM region in the process address space
            ret_addr = reserve_address(addr, map_size);
                // Inside reserve_address: the CPU address is requested at the
                // address inside the SVM aperture. Without MAP_FIXED, addr is
                // only a hint, so the returned address must still be checked.
                ret_addr = mmap(addr, len, PROT_NONE, MAP_ANONYMOUS | MAP_NORESERVE | MAP_PRIVATE, -1, 0);
        }
    }

    // Initialize the svm.apertures[SVM_DEFAULT] structure
    svm.apertures[SVM_DEFAULT].base = dgpu_shared_aperture_base = ret_addr;
    svm.apertures[SVM_DEFAULT].limit = dgpu_shared_aperture_limit = (void *)limit;
    svm.apertures[SVM_DEFAULT].align = align;
    svm.apertures[SVM_DEFAULT].guard_pages = guard_pages;
    svm.apertures[SVM_DEFAULT].is_cpu_accessible = true;
    svm.apertures[SVM_DEFAULT].ops = &reserved_aperture_ops;
}
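The second mmap differs from the first in that it passes a specific address. A simplified sketch of the idea follows, assuming a single start address instead of ROCT's inner loop over `ADDR_INC` steps, and a stricter acceptance test (the whole mapping must fit in the range). `reserve_in_range` and its parameters are hypothetical names, not ROCT functions.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE 1
#endif
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>

/* Hypothetical, simplified analogue of the reservation loop: try to
 * reserve address space starting at base, halving the length on each
 * failure. On success, *out_len holds the reserved length. */
void *reserve_in_range(uint64_t base, uint64_t limit,
                       uint64_t min_len, uint64_t *out_len)
{
    if (min_len == 0 || limit < base)
        return NULL;

    for (uint64_t len = limit - base + 1; len >= min_len; len >>= 1) {
        /* base is only a hint here because MAP_FIXED is not set. */
        void *p = mmap((void *)(uintptr_t)base, len, PROT_NONE,
                       MAP_ANONYMOUS | MAP_NORESERVE | MAP_PRIVATE, -1, 0);
        if (p == MAP_FAILED)
            continue;

        /* Accept the mapping only if it landed inside [base, limit]. */
        uint64_t start = (uint64_t)(uintptr_t)p;
        if (start >= base && start + len - 1 <= limit) {
            *out_len = len;
            return p;
        }

        /* The kernel ignored the hint; undo and retry with less. */
        munmap(p, len);
    }
    return NULL;
}
```

The important contrast with the full-SVM path is the check after mmap: when only part of the address space is GPU-addressable, an address chosen freely by the kernel may fall outside the aperture, so the result has to be validated and possibly discarded.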

fmm_init_process_apertures
{
    // Get the GPUVM apertures of all GPU nodes
    get_process_apertures(process_apertures, &num_of_sysfs_nodes);

    // Walk the GPUVM aperture of each GPU node
    for (i = 0 ; i < num_of_sysfs_nodes ; i++) {
        // If the limit is a canonical address, use the intersection of
        // all such GPUVM ranges as the SVM range
        if (IS_CANONICAL_ADDR(process_apertures[i].gpuvm_limit)) {
            if (process_apertures[i].gpuvm_base > svm_base)
                svm_base = process_apertures[i].gpuvm_base;
            if (process_apertures[i].gpuvm_limit < svm_limit || svm_limit == 0)
                svm_limit = process_apertures[i].gpuvm_limit;
        } else {
            // Non-canonical addresses are not covered here
        }

        // Have KFD acquire the GPUVM of each GPU
        ret = acquire_vm(gpu_mem[gpu_mem_id].gpu_id, gpu_mem[gpu_mem_id].drm_render_fd);
    }

    // Set up the SVM space
    if (svm_limit) {
        // At least one GPU uses GPUVM in canonical address
        // space. Set up SVM apertures shared by all such GPUs
        ret = init_svm_apertures(svm_base, svm_limit, svm_alignment, guardPages);
    }
}
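The intersection logic in the loop above can be isolated into a standalone sketch. `struct gpuvm_range`, `intersect_gpuvm`, and `in_lower_canonical_half` are hypothetical stand-ins. In particular, treating "canonical" as "below 2^47" is an assumption made for this example, not a statement of how `IS_CANONICAL_ADDR` is defined.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the gpuvm_base / gpuvm_limit pair of one node. */
struct gpuvm_range {
    uint64_t base;
    uint64_t limit;
};

/* Assumption for this sketch: an address counts as canonical if it lies
 * in the lower half of a 48-bit address space. */
static bool in_lower_canonical_half(uint64_t addr)
{
    return addr < (UINT64_C(1) << 47);
}

/* Intersect all ranges whose limit is canonical: highest base, lowest
 * limit. Returns false if no range qualified (limit stays 0). */
bool intersect_gpuvm(const struct gpuvm_range *r, size_t n,
                     uint64_t *svm_base, uint64_t *svm_limit)
{
    uint64_t base = 0, limit = 0;

    for (size_t i = 0; i < n; i++) {
        if (!in_lower_canonical_half(r[i].limit))
            continue; /* skipped, as in the else branch above */
        if (r[i].base > base)
            base = r[i].base;
        if (r[i].limit < limit || limit == 0)
            limit = r[i].limit;
    }

    *svm_base = base;
    *svm_limit = limit;
    return limit != 0;
}
```

Taking the highest base and the lowest limit yields a range that every participating GPU can address, which is what allows a single SVM aperture to be shared by all of them.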
