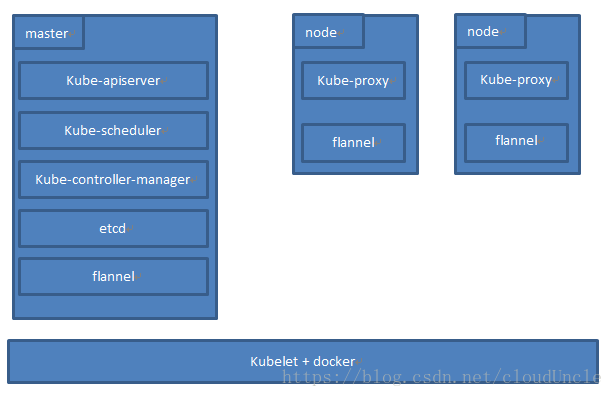

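This article deploys a Kubernetes cluster with kubeadm; the architecture diagram is shown above.

In this setup the main Kubernetes components (kube-apiserver, kube-scheduler, kube-controller-manager, etcd, flannel and kube-proxy) run as pods, while every node, including the master, runs the kubelet and docker daemons directly. Make sure both kubelet and docker are running.

I. Preparation

1. Disable iptables or firewalld.service on all nodes

Kubernetes manipulates iptables rules extensively, so firewalld.service must be disabled.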

//Check the firewall status (the output below shows that it is enabled)

[root@master ~]# systemctl list-unit-files | grep firewalld.service

firewalld.service enabled

//Stop firewalld.service

[root@master ~]# systemctl stop firewalld.service

//Prevent it from starting at boot

[root@master ~]# systemctl disable firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
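You can wrap the stop and disable steps above in a helper that is safe to run more than once on every node. The sketch below assumes a systemd host such as CentOS 7. `disable_firewalld` is a hypothetical helper name, and the only commands it uses are `systemctl is-active`, `is-enabled`, `stop` and `disable`:

```shell
# Hypothetical helper: stop firewalld if it is running and disable it at boot
# if it is enabled. Idempotent: does nothing when firewalld is already off.
disable_firewalld() {
    # --quiet suppresses output; the exit status alone tells us the state
    if systemctl is-active --quiet firewalld.service; then
        systemctl stop firewalld.service
    fi
    if systemctl is-enabled --quiet firewalld.service; then
        systemctl disable firewalld.service
    fi
}
```

After running it, `systemctl list-unit-files | grep firewalld.service` should report `disabled`.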

2. Disable SELinux on every node

[root@master ~]# setenforce 0

[root@master ~]# vim /etc/selinux/config

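Change SELINUX=enforcing to SELINUX=disabled. (setenforce 0 only switches SELinux to permissive mode until the next reboot; editing the config file makes the change permanent.)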
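You can make the vim edit above non-interactive with `sed`. This is a minimal sketch. `disable_selinux_config` is a hypothetical helper that takes the config path as an optional argument (default /etc/selinux/config), so you can try it on a copy first:

```shell
# Hypothetical helper: persist SELINUX=disabled in the SELinux config file.
# Only the "SELINUX=" line is rewritten; "SELINUXTYPE=" does not match
# because the character after "SELINUX" is "T", not "=".
disable_selinux_config() {
    local conf="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}
```

On a node you would run `setenforce 0 && disable_selinux_config`. Afterwards `getenforce` should print `Permissive` until the next reboot and `Disabled` after it.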

3. Synchronize the clocks

[root@master ~]# yum install -y ntpdate

[root@master ~]# ntpdate -u ntp.api.bz

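4. Add hostname resolution on every node so the nodes can reach (ping) each other by name

Add the following lines to /etc/hosts on every node, so the nodes can resolve each other's hostnames locally: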

192.168.116.130 master

192.168.116.129 node1

192.168.116.128 node2

Note: first change each node's hostname to match these entries. For how to change the hostname, see my earlier blog post https://blog.csdn.net/cloudUncle/article/details/82504904
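When these entries are added by a script, appending them blindly duplicates them on every run. The sketch below only appends an entry whose hostname is not already present. `add_host_entry` and `add_cluster_hosts` are hypothetical helper names. The IPs and names are the ones from this article, and the file path is a parameter (default /etc/hosts):

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME already
# appears on a non-comment line. NAME is used as a regex, which is fine for
# plain hostnames such as master/node1/node2.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Add the three entries used in this article; safe to run repeatedly.
add_cluster_hosts() {
    local file="${1:-/etc/hosts}"
    add_host_entry 192.168.116.130 master "$file"
    add_host_entry 192.168.116.129 node1 "$file"
    add_host_entry 192.168.116.128 node2 "$file"
}
```

On CentOS 7 you can set each node's hostname itself with `hostnamectl set-hostname node1` (and likewise for the other nodes).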

II. Install docker-ce, kubelet, kubeadm and kubectl on every node

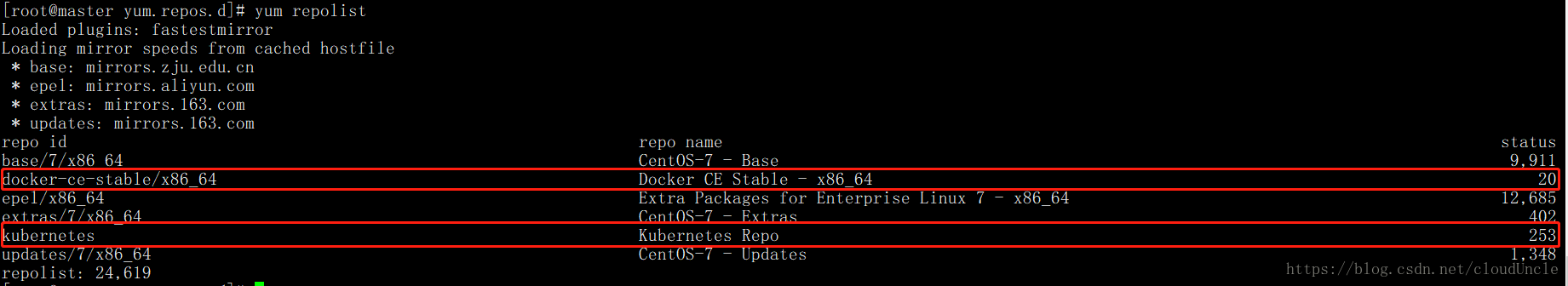

1. Add the Alibaba Cloud yum repositories for docker and kubernetes

Alibaba open-source mirror site: https://opsx.alibaba.com/mirror

Add the docker-ce yum repository:

[root@master ~]# cd /etc/yum.repos.d/

[root@master yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Add the kubernetes yum repository:

[root@master yum.repos.d]# cat > kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes Repo

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

> gpgcheck=1

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> enabled=1

> EOF
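The `>` characters in the transcript above are the shell's continuation prompt; they are not part of the file. You can also write the same repository file from a script. This is a minimal sketch. `write_k8s_repo` is a hypothetical helper whose target directory is a parameter (default /etc/yum.repos.d). It writes `enabled=1`, which is the option name yum reads:

```shell
# Hypothetical helper: write the Aliyun Kubernetes repo definition used above.
# The quoted 'EOF' prevents any variable expansion inside the file body.
write_k8s_repo() {
    local dir="${1:-/etc/yum.repos.d}"
    cat > "$dir/kubernetes.repo" << 'EOF'
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```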

Check that the repositories are usable:

[root@master yum.repos.d]# yum repolist

The output shows that both repositories are available.

2. Install docker, kubeadm, kubectl and kubelet

[root@master ~]# yum install -y docker-ce kubelet kubeadm kubectl
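The command above installs whichever package versions are newest in the repository when you run it. The cluster below, however, is initialized with `--kubernetes-version=v1.11.1`. If you want kubelet, kubeadm and kubectl to match that version, yum accepts `name-version` package specs. This is a small sketch. `k8s_pkgs` is a hypothetical helper. Before relying on it, check that the version is still published in the Aliyun repository with `yum list --showduplicates kubeadm`:

```shell
# Hypothetical helper: print yum package specs pinned to one Kubernetes
# version, e.g. "kubelet-1.11.1 kubeadm-1.11.1 kubectl-1.11.1".
k8s_pkgs() {
    local ver="$1"
    printf 'kubelet-%s kubeadm-%s kubectl-%s\n' "$ver" "$ver" "$ver"
}
```

Usage: `yum install -y docker-ce $(k8s_pkgs 1.11.1)`. The command substitution is left unquoted on purpose so that the three specs become separate arguments.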

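III. Configure docker and kubelet on every node

Because docker will later manipulate iptables heavily, also confirm that the bridge-nf-call settings are 1, so that bridged traffic passes through iptables: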

[root@master ~]# cat /proc/sys/net/bridge/bridge-nf-call-iptables

1

[root@master ~]# cat /proc/sys/net/bridge/bridge-nf-call-ip6tables

1

If either value is not 1, add the following to /etc/sysctl.conf:

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

Then run:

[root@master ~]# sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

If you get the following errors:

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

Fix: the br_netfilter kernel module is not loaded yet. Load it and run sysctl -p again:

[root@localhost ~]# modprobe br_netfilter

[root@localhost ~]# ls /proc/sys/net/bridge

bridge-nf-call-arptables bridge-nf-filter-pppoe-tagged

bridge-nf-call-ip6tables bridge-nf-filter-vlan-tagged

bridge-nf-call-iptables bridge-nf-pass-vlan-input-dev

[root@localhost ~]# sysctl -p

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
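You can script the check-and-append steps above so that re-running them does not add duplicate lines. This is a minimal sketch. `ensure_sysctl` and `enable_bridge_nf` are hypothetical helper names. The file path is a parameter (default /etc/sysctl.conf), so you can try them on a scratch copy first:

```shell
# Hypothetical helper: make sure "KEY = VALUE" is set in a sysctl file,
# rewriting an existing line for KEY or appending a new one.
ensure_sysctl() {
    local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
    local key_re
    # Escape the dots in the key so they match literally
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -Eq "^[[:space:]]*${key_re}[[:space:]]*=" "$file" 2>/dev/null; then
        sed -i -E "s/^[[:space:]]*${key_re}[[:space:]]*=.*/${key} = ${value}/" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Set both bridge-nf-call keys discussed above to 1.
enable_bridge_nf() {
    local file="${1:-/etc/sysctl.conf}"
    ensure_sysctl net.bridge.bridge-nf-call-iptables 1 "$file"
    ensure_sysctl net.bridge.bridge-nf-call-ip6tables 1 "$file"
}
```

On a real node you would run `modprobe br_netfilter`, then `enable_bridge_nf`, then `sysctl -p`. `modprobe` does not persist across reboots. Listing `br_netfilter` in a file under /etc/modules-load.d/ lets systemd load the module at boot.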

Enable kubelet and docker at boot:

[root@master ~]# systemctl enable kubelet

[root@master ~]# systemctl enable docker

Configure kubelet not to fail when swap is enabled:

[root@master ~]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

Save and exit.
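You can also make the vim edit above non-interactively. This is a minimal sketch. `set_kubelet_extra_args` is a hypothetical helper that replaces an existing `KUBELET_EXTRA_ARGS=` line or appends one. The file path is a parameter (default /etc/sysconfig/kubelet):

```shell
# Hypothetical helper: set KUBELET_EXTRA_ARGS="ARGS" in the kubelet sysconfig
# file. ARGS must not contain "|" or "&", which are special in the sed command.
set_kubelet_extra_args() {
    local args="$1" file="${2:-/etc/sysconfig/kubelet}"
    if grep -q '^KUBELET_EXTRA_ARGS=' "$file" 2>/dev/null; then
        sed -i "s|^KUBELET_EXTRA_ARGS=.*|KUBELET_EXTRA_ARGS=\"${args}\"|" "$file"
    else
        printf 'KUBELET_EXTRA_ARGS="%s"\n' "$args" >> "$file"
    fi
}
```

Usage: `set_kubelet_extra_args --fail-swap-on=false`. The setting takes effect the next time kubelet starts.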

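IV. Deploy the Kubernetes cluster with kubeadm

Pull the Kubernetes images from a mirror and retag them with the k8s.gcr.io prefix so that kubeadm can find them. Run the following script on the master node: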

#!/bin/bash

images=(kube-proxy-amd64:v1.11.1 kube-apiserver-amd64:v1.11.1 kube-controller-manager-amd64:v1.11.1 kube-scheduler-amd64:v1.11.1 coredns:1.1.3 etcd-amd64:3.2.18 pause:3.1)

for imageName in "${images[@]}"; do

docker pull "registry.cn-hangzhou.aliyuncs.com/k8sth/${imageName}"

docker tag "registry.cn-hangzhou.aliyuncs.com/k8sth/${imageName}" "k8s.gcr.io/${imageName}"

docker rmi "registry.cn-hangzhou.aliyuncs.com/k8sth/${imageName}"

done
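Before running `kubeadm init`, you may want to confirm that every retagged image is present locally. This is a sketch. `missing_k8s_images` is a hypothetical helper that reads `repository:tag` lines on stdin, such as the output of `docker images --format '{{.Repository}}:{{.Tag}}'`. It prints each expected image it does not find. The image list is copied from the script above. If your kubeadm provides `kubeadm config images list --kubernetes-version v1.11.1`, that is a second list to compare against:

```shell
# Hypothetical check: print every k8s.gcr.io image from the script above that
# is missing from the "repository:tag" lines read on stdin.
missing_k8s_images() {
    local have
    have=$(cat)
    for img in kube-proxy-amd64:v1.11.1 kube-apiserver-amd64:v1.11.1 \
               kube-controller-manager-amd64:v1.11.1 kube-scheduler-amd64:v1.11.1 \
               coredns:1.1.3 etcd-amd64:3.2.18 pause:3.1; do
        # -x: whole-line match, -F: match the name literally
        printf '%s\n' "$have" | grep -qxF "k8s.gcr.io/$img" || echo "k8s.gcr.io/$img"
    done
}
```

Usage: `docker images --format '{{.Repository}}:{{.Tag}}' | missing_k8s_images` prints nothing when all seven images are in place.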

Initialize the master node:

[root@master ~]# kubeadm init --kubernetes-version=v1.11.1 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

...

...

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.116.130:6443 --token 5b726v.o7glxwxrjwm4i9yy --discovery-token-ca-cert-hash sha256:d2a89ee2f04c326840cc4bf163fab4236f5c7006da9eb9e15cbe60ca026ea8ec

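If you see the output above, congratulations: the master has been initialized successfully.

Write down the kubeadm join … command; you will need it later to add the nodes to the cluster.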
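If you capture the init output to a file (for example `kubeadm init ... | tee init.log`), you can pull the join command out of it instead of copying it by hand. This is a sketch. `extract_join_cmd` is a hypothetical helper, and the default file name `init.log` is an assumption. It relies on the join command appearing on a single line, as in the v1.11 output above. Join tokens expire (after 24 hours by default). If yours has expired, `kubeadm token create --print-join-command` on the master prints a fresh join command.

```shell
# Hypothetical helper: print the last "kubeadm join ..." line from a saved
# kubeadm init log, with its leading indentation removed.
extract_join_cmd() {
    grep -E '^[[:space:]]*kubeadm join ' "${1:-init.log}" | tail -n 1 | sed 's/^[[:space:]]*//'
}
```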

Following the output above, run these commands on the master so that kubectl can be used (as root, the chown step is not needed):

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 52m v1.11.3

kubectl now works.

However, kubectl get nodes shows the master node in the NotReady state. Why?

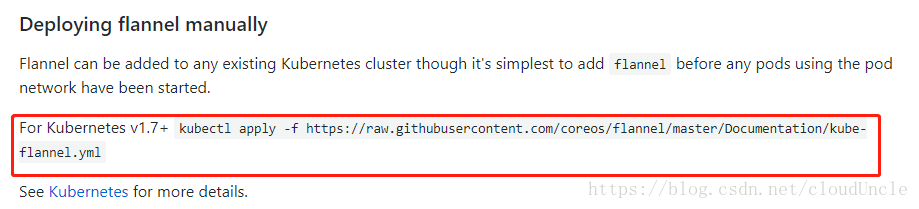

This is because an essential network plugin is still missing (this article uses flannel).

flannel on GitHub: https://github.com/coreos/flannel

Following its README, run:

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

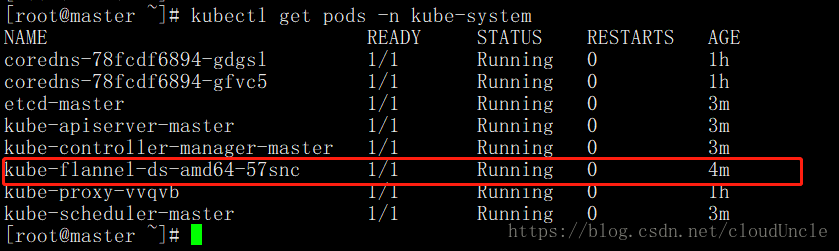

Check the node status again with kubectl get nodes:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 1h v1.11.3

The master node is now Ready, and the flannel pod is Running as well.
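Rather than re-running `kubectl get nodes` by hand, you can read its output with a small filter. This is a sketch. `nodes_not_ready` is a hypothetical helper, and it assumes the column layout shown above, where STATUS is the second column. It prints every node whose status is not exactly `Ready`. It returns 0 only when at least one node is listed and all listed nodes are Ready:

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin, print the
# names of nodes that are not "Ready", and exit non-zero if any were found
# or if no node rows were read at all (e.g. kubectl itself failed).
nodes_not_ready() {
    awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 }
         END { if (NR < 2) bad = 1; exit bad }'
}
```

Usage: `until kubectl get nodes | nodes_not_ready > /dev/null; do sleep 5; done` waits until every node reports Ready.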

On each node, run the kubeadm join command you wrote down earlier to add the node to the cluster (Swap must be ignored here as well):

[root@node1 ~]# kubeadm join 192.168.116.130:6443 --token 5b726v.o7glxwxrjwm4i9yy --discovery-token-ca-cert-hash sha256:d2a89ee2f04c326840cc4bf163fab4236f5c7006da9eb9e15cbe60ca026ea8ec --ignore-preflight-errors=Swap

.......

.......

This node has joined the cluster:

* Certificate signing request was sent to master and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

On the master node, run:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 1h v1.11.3

node1 NotReady <none> 10m v1.11.3

node2 NotReady <none> 8m v1.11.3

Both nodes are NotReady. Check the pod status on the master with:

[root@master ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

coredns-78fcdf6894-gdgsl 1/1 Running 0 1h 10.244.0.3 master

coredns-78fcdf6894-gfvc5 1/1 Running 0 1h 10.244.0.2 master

etcd-master 1/1 Running 0 35m 192.168.116.130 master

kube-apiserver-master 1/1 Running 0 35m 192.168.116.130 master

kube-controller-manager-master 1/1 Running 0 35m 192.168.116.130 master

kube-flannel-ds-amd64-57snc 1/1 Running 0 36m 192.168.116.130 master

kube-flannel-ds-amd64-m44tw 0/1 Init:0/1 0 9m 192.168.116.129 node1

kube-flannel-ds-amd64-xtlsr 0/1 Init:0/1 0 8m 192.168.116.128 node2

kube-proxy-rqb2m 0/1 ContainerCreating 0 9m 192.168.116.129 node1

kube-proxy-tzv77 0/1 ContainerCreating 0 8m 192.168.116.128 node2

kube-proxy-vvqvb 1/1 Running 0 1h 192.168.116.130 master

kube-scheduler-master 1/1 Running 0 35m 192.168.116.130 master

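The kube-flannel and kube-proxy pods on the two nodes have not started. Neither node has the images these pods need (kube-proxy, flannel and the pause image), so the pods cannot start. Copy these images from the master to the nodes: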
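To spot pods that are stuck, as in the listing above, you can filter the pod table. This is a sketch. `pods_not_running` is a hypothetical helper, and it assumes the column layout shown above: STATUS is the 3rd column and NODE the 7th. Newer kubectl versions add more columns, so adjust the indices if your output differs.

```shell
# Hypothetical helper: read `kubectl get pods -n kube-system -o wide` output
# on stdin and print "NAME STATUS NODE" for every pod that is not Running.
pods_not_running() {
    awk 'NR > 1 && $3 != "Running" { print $1, $3, $7 }'
}
```

For any pod it reports, `kubectl describe pod <name> -n kube-system` shows an Events section, which usually explains the problem (for example, an image that cannot be pulled).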

[root@master ~]# docker save -o kube-proxy-amd64-v1.11.1.tar k8s.gcr.io/kube-proxy-amd64:v1.11.1

[root@master ~]# docker save -o pause-3.1.tar k8s.gcr.io/pause:3.1

[root@master ~]# docker save -o flannel-v0.10.0-amd64.tar quay.io/coreos/flannel:v0.10.0-amd64

Then copy the tar files to both nodes and import them:

[root@node1 ~]# docker load < kube-proxy-amd64-v1.11.1.tar

[root@node1 ~]# docker load < pause-3.1.tar

[root@node1 ~]# docker load < flannel-v0.10.0-amd64.tar
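The save commands above follow a fixed naming pattern, so you can generate them instead of typing each one. This is a sketch. `image_tar_name` and `save_images` are hypothetical helpers. `save_images` uses only `docker save -o`, as above:

```shell
# Hypothetical helper: derive the tar name used above from an image reference,
# e.g. k8s.gcr.io/pause:3.1 -> pause-3.1.tar
image_tar_name() {
    # Drop the registry/repository path, then turn ":" into "-"
    printf '%s.tar\n' "$(basename "$1" | tr ':' '-')"
}

# Hypothetical helper: save each given image to its own tar file.
save_images() {
    for img in "$@"; do
        docker save -o "$(image_tar_name "$img")" "$img"
    done
}
```

Usage on the master: `save_images k8s.gcr.io/kube-proxy-amd64:v1.11.1 k8s.gcr.io/pause:3.1 quay.io/coreos/flannel:v0.10.0-amd64`. On each node, `for f in *.tar; do docker load -i "$f"; done` imports them. If SSH access between the machines is set up, `docker save IMAGE | ssh root@node1 docker load` skips the intermediate file.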

Check again whether the pods are Running:

[root@master ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

coredns-78fcdf6894-gdgsl 1/1 Running 0 1h 10.244.0.3 master

coredns-78fcdf6894-gfvc5 1/1 Running 0 1h 10.244.0.2 master

etcd-master 1/1 Running 0 55m 192.168.116.130 master

kube-apiserver-master 1/1 Running 0 55m 192.168.116.130 master

kube-controller-manager-master 1/1 Running 0 55m 192.168.116.130 master

kube-flannel-ds-amd64-57snc 1/1 Running 0 57m 192.168.116.130 master

kube-flannel-ds-amd64-6xjhc 1/1 Running 0 13m 192.168.116.128 node2

kube-flannel-ds-amd64-jdt4d 1/1 Running 0 13m 192.168.116.129 node1

kube-proxy-phqhl 1/1 Running 0 13m 192.168.116.128 node2

kube-proxy-vpxcx 1/1 Running 0 13m 192.168.116.129 node1

kube-proxy-vvqvb 1/1 Running 0 1h 192.168.116.130 master

kube-scheduler-master 1/1 Running 0 55m 192.168.116.130 master

All pods on the nodes have now started normally. Congratulations, the Kubernetes cluster is up and running!
